The Importance of AI Security for HIPAA Compliance in 2025

There’s a new reality that many in healthcare recognize: we are embracing artificial intelligence (AI) technologies faster than we are securing them.

As AI technologies rapidly advance and become incorporated into healthcare workflows, a significant gap has emerged between these tools and the existing policies designed to protect sensitive health information under HIPAA.

In this article, we explore why HIPAA compliance matters even more in the era of AI and how organizations can apply the HIPAA Security Rule to AI security settings.

How AI-related Security Risks Affect HIPAA Compliance

Covered entities, such as healthcare providers and health insurers, and their business associates must meet their responsibilities under HIPAA while preventing unauthorized exposure of patient data.

The HIPAA Security Rule sets the standards for safeguarding electronic protected health information (ePHI). As AI adoption increases, protecting patient health information becomes more critical because AI introduces new risks of unauthorized access and security breaches.

As healthcare organizations integrate AI into their workflow systems, a clear disconnect has opened between AI security settings and the policies and protections designed to govern them.

Using these tools without compromising data security is a current challenge for healthcare organizations that strive to keep ePHI secure at rest and in transit. Because many AI tools are cloud-based applications, organizations must strike a more delicate balance between making valuable data available and keeping sensitive information secure. That makes operating policies and governance for AI a priority.

AI Adoption is Outpacing Data Governance Efforts

Incorporating AI into healthcare workflows requires strong data governance to ensure that innovations align with privacy and security standards. A recent TechTarget report highlights the surge in AI-related security threats, including new malware attacks that exploit vulnerabilities in AI systems. Without comprehensive security controls around AI tools, the risk of unauthorized access to sensitive data increases.

New research from IBM and the Ponemon Institute shows that AI adoption is progressing without including the necessary security and governance measures. In the Cost of a Data Breach Report 2025, they report that:

- 97% of organizations that reported an AI-related breach also lacked robust AI access controls, leading to compromised applications, APIs, or plugins.

- 63% of organizations either lacked AI governance policies or were still in the process of developing them, even as their workforce utilized AI tools.

This indicates that the gap between AI governance and AI usage incurs significant costs. When organizations fail to implement governance policies to manage and mitigate risks, they face additional operational and security challenges.

The Department of Health and Human Services (HHS), which is responsible for enforcing HIPAA, has yet to provide specific guidelines on applying the HIPAA Security Rule to AI tools. Even so, the established principles of the Security Rule can inform AI security responsibilities and policies and help establish governance practices for implementing and using AI tools in healthcare. Governing AI security controls this way helps keep innovation both safe and compliant with established regulations.

Learn more about proposed changes in our recent blog post.

HIPAA Wasn’t Built for AI, But Your Organization Can Be

Balancing AI deployments with HIPAA compliance presents both opportunities and challenges. Navigating them starts with a clear understanding of the risks involved. From there, best practices can help organizations avoid pitfalls and integrate AI technologies more smoothly within the framework of HIPAA regulations.

As the TechTarget article underscores, many organizations lack clear internal policies for how AI tools interact with sensitive data. Nearly half of the surveyed organizations did not monitor how employees use generative AI in their daily workflow. That blind spot makes the job harder for the compliance team, which is responsible for monitoring and maintaining the standards for sensitive data protection required under the HIPAA Security Rule.

As AI tools play a growing role in employees’ daily tasks, it becomes essential for companies to institute stronger policies that clearly outline the acceptable use of AI and the data that these tools rely on to function. They must also invest in training employees to use these tools effectively while safeguarding ePHI.

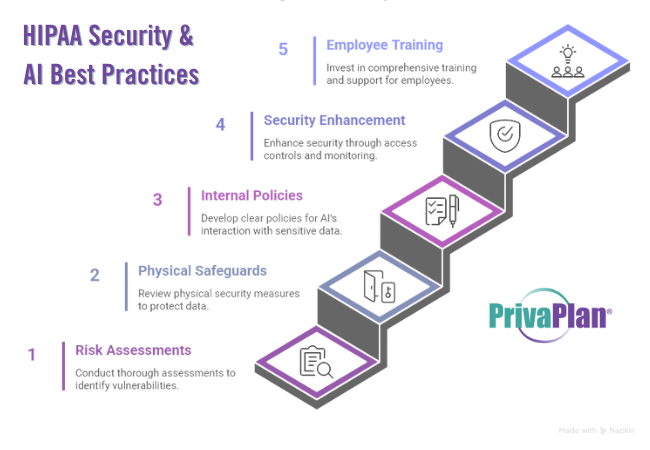

Even though the HIPAA Security Rule was established before the rise of AI, its rules for using and disclosing ePHI, along with its security expectations for configured settings, can still be applied. Important steps under the HIPAA Security Rule include:

- Conduct and document thorough risk assessments to identify vulnerabilities.

- Review physical safeguards as part of the risk assessment.

- Develop clear internal policies governing AI’s interaction with sensitive data, including data integrity and management over time.

- Enhance security by thoroughly reviewing access controls, logging practices, monitoring systems, and incident reporting protocols.

- Invest in comprehensive employee training and ongoing support so your workforce can use AI tools with confidence.
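As a rough illustration of how the access control and logging items above might look in practice, here is a minimal Python sketch. It is not taken from the HIPAA Security Rule or from any vendor's product: the tool names, roles, `APPROVED_TOOLS` table, and `authorize_ai_request` function are all hypothetical, and the two regular expressions are illustrative only and are not an adequate ePHI detection or de-identification method.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical policy table: which workforce roles may use which approved AI tools.
APPROVED_TOOLS = {
    "ambient_scribe": {"physician", "nurse"},
    "coding_assistant": {"coder"},
}

# Illustrative patterns only; real ePHI detection needs far more than two regexes.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
PHONE_PATTERN = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")


def redact(text: str) -> str:
    """Mask SSN-like and phone-like strings before text is sent to an AI tool."""
    text = SSN_PATTERN.sub("[REDACTED-SSN]", text)
    return PHONE_PATTERN.sub("[REDACTED-PHONE]", text)


def authorize_ai_request(user_id, role, tool, prompt, audit_log):
    """Check role-based access, record an audit entry, and return the
    redacted prompt if the request is allowed, or None if it is denied."""
    allowed = role in APPROVED_TOOLS.get(tool, set())
    # The audit entry records who asked for what, but not the prompt itself,
    # so the log does not become another store of sensitive text.
    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "role": role,
        "tool": tool,
        "allowed": allowed,
    }))
    return redact(prompt) if allowed else None
```

In this sketch, a physician's request to the hypothetical `ambient_scribe` tool would come back with matching strings masked, while a coder's request to the same tool would return `None`. Both attempts land in the audit log, which gives a compliance team something concrete to monitor and review.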

The Compliance Guide to Navigate Settings with Confidence

David Ginsberg, CEO of PrivaPlan Associates, emphasizes,

“Healthcare innovation must go hand in hand with privacy and security. Our new guide empowers organizations to harness the potential of generative AI while staying fully compliant with HIPAA and NIST standards. It’s about enabling smarter, faster, and safer care delivery through responsible AI adoption.”

Our AI Compliance Guide for the HIPAA Security Rule is the first of its kind: a practical, actionable toolkit that helps healthcare organizations, compliance leaders, and IT pros navigate the risks, requirements, and responsibilities of AI under the HIPAA Security Rule. Its goal is to ensure that AI adoption does not compromise the security of electronic health records or other sensitive health information.

What’s Inside?

- Clear breakdowns of how to establish security policies for AI tools within the HIPAA Security Rule framework.

- Risk analysis methods tailored to AI applications such as Ambient Scribes.

- Guidance on third-party AI vendors, cloud-based AI tools, and the importance of Business Associate Agreements (BAAs).

Guide Your Organization’s AI Adoption with Governance

The HIPAA Security Rule protects patient health information through technical and nontechnical safeguards. It gives covered entities the frameworks, policies, and technological safeguards they need to implement effective privacy and security measures.

It is essential to protect health information in electronic form, ensuring that electronic health records and digital transmissions of PHI remain secure. By doing so, covered entities and their business associates can maintain HIPAA compliance while benefiting from AI innovations.

A quick Security Risk Assessment with us can uncover the small cracks before they become big problems.

Our Security Risk Assessments deliver actionable insights to safeguard your infrastructure, data, and brand reputation.

Schedule your assessment today and take the first step toward a more secure, compliant future.